Important Notice: This Blog Has Moved!

Please note that this blog has moved to Blogspot:

http://johnwhigham.blogspot.com/

All current content has been migrated and future posts will be made to that site only so if you want to follow developments with Osiris please head over there or update any feed subscriptions you have – the RSS Atom for posts on the new site is:

http://johnwhigham.blogspot.com/feeds/posts/default

Apologies for the inconvenience and I hope you continue to enjoy my content on the new site.

John

A Note on Texture Filtering

This is just a short note to highlight the visual impact that mip map generation and texture filtering methods can have on a final rendered result. It’s not rocket science and will be common knowledge to many, but I felt this was a nice clear example of how significant the effects can be and worth sharing.

I’ve recently been playing around with procedural city and road generation which has of course led to rendering of road surfaces for which I am using a trivially simple programmer art texture:

Even though I knew that fine detail on textures viewed at extreme angles is always troublesome, feeding this through the normal mip map generation code with the existing trilinear texture filtering produced a surprisingly disappointing result:

As you can see, the white lines in the road vanish very quickly with distance as their light coloured texels are swamped by the dark road surface around them during mip map down sampling – not at all realistic and bad enough for me to take a second look. The first thing was to look at the mip map generation code to see if it could be persuaded to keep more of the detail that I cared about. Currently each mip map level was being generated using a naive 2×2 box filter with uniform weights:

lower_mip_texel = (higher_mip_texel1+higher_mip_texel2+higher_mip_texel3+higher_mip_texel4)/4;

very easy, very fast but also very bad at keeping detail. There are many better filters out there with varying quality/performance tradeoffs but I thought it worth trying a quick and simple change to weight the pixels by their approximate luminosity, calculated by simply averaging each source texel’s red, green and blue channels clamped to a chosen range:

higher_mip_texel_weight = Clamp((higher_mip_texel_red+higher_mip_texel_green+higher_mip_texel_blue)/3, 50, 255)

lower_mip_texel = (

(higher_mip_texel1*higher_mip_texel_weight1)+

(higher_mip_texel2*higher_mip_texel_weight2)+

(higher_mip_texel3*higher_mip_texel_weight3)+

(higher_mip_texel4*higher_mip_texel_weight4))/

(higher_mip_texel_weight1+higher_mip_texel_weight2+

higher_mip_texel_weight3+higher_mip_texel_weight4);

This then favours brighter texels which will be more visible in the final render. Using this code to generate the mip maps instead of the uniformly weighted filter produced immediately better results:

While the white lines are now visible far more into the distance they become disproportionately fat as the lower mip level texels cover more and more screen space. There’s not much that can be done about this: however we filter the texture when making the mip maps, we are either going to end up with no white lines or big fat ones.
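As a rough illustration of the two mip map filters described above, here is a minimal Python sketch (not the engine's own code; the function names and the sample texel values are invented for the example). It reduces one 2×2 block of RGB texels with the uniform box filter and with the luminance weighted filter, using the same 50 to 255 clamp range as the formula above.

```python
def clamp(value, low, high):
    return max(low, min(high, value))

def box_filter(texels):
    """Uniform 2x2 box filter: plain average of each channel."""
    return tuple(sum(t[c] for t in texels) / len(texels) for c in range(3))

def luminance_weighted_filter(texels, min_weight=50, max_weight=255):
    """Weight each source texel by the clamped average of its R, G and B."""
    weights = [clamp(sum(t) / 3, min_weight, max_weight) for t in texels]
    total = sum(weights)
    return tuple(
        sum(t[c] * w for t, w in zip(texels, weights)) / total
        for c in range(3)
    )

# One white line texel surrounded by three dark road texels (made-up values).
block = [(255, 255, 255), (40, 40, 40), (40, 40, 40), (40, 40, 40)]
print(box_filter(block))                 # the white texel is mostly averaged away
print(luminance_weighted_filter(block))  # the white texel dominates the result
```

A side effect of clamping the weight to a non-zero minimum is that the divisor can never be zero, even for a block of completely black texels.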

The next step then is to stop using trilinear filtering at runtime and instead switch to anisotropic filtering, where the filter kernel shape effectively changes with the aspect of the textured surface on screen. The GPU can, for example, filter a wider area of the texture in the ‘u’ axis than it does in the ‘v’ and has more information for choosing the mip level to read each filter sample from. Returning to the original uniformly weighted mip maps where the lines disappeared so early but switching to anisotropic filtering produces noticeably better results than either attempt so far:

and finally for good measure using the weighted mip maps with anisotropic filtering:

Not as dramatic an effect as when switching to weighted mips with trilinear filtering, but still an improvement and the best result of all.

So why not use anisotropic filtering all the time? Well, it comes down to compatibility and performance. Not all legacy GPUs support it, although most modern cards do, and even on cards that support it there is a performance cost as the GPU has to do significantly more work. The effects speak for themselves though, and going forward I expect it will become the default setting for most games to avoid over-blurry textures as objects move away from the near plane.

A Tale of Two Thousand Cities

Last post I talked about the perhaps not so interesting topic of data generation and caching, not a subject many people can get very excited about, but this time I want to talk about something I think is far more interesting. City Generation.

I’ve been interested in procedural landscape/planet generation for at least ten years, and over that time have seen at least dozens if not hundreds of landscape projects produced by other people out there who share this interest. One thing many of these projects have in common, however, is that their landscapes, however pretty, tend to be fairly devoid of human infrastructure. One reason is that, due to their inherent fractal nature, it’s generally easier to generate decent looking mountains, valleys and even trees and grass than it is to produce realistic roads, railways, towns and cities. Heightfield generation for landscapes is also a well covered topic with many professional and amateur research papers and articles on the subject – not so much on infrastructure simulation.

I’m as guilty of this as anyone else – none of the several landscape projects I’ve created in the past could boast anything particularly man made, no roads, no bridges, no buildings or at least none that were arranged in a manner more sophisticated than randomly dotted around on flat bits of ground.

So I thought it about time I got stuck in to what I consider to be a very interesting problem – that of procedural city generation. A tricky problem to be sure but that is of course what makes it interesting.

References

As with embarking upon any other problem, Google is your friend and after a little digging and reading I turned up a number of resources full of ideas on how cities can be generated. I’ve listed a few here that I have found interesting, which should provide an introduction to anyone wishing to take this subject further:

- Procedural Modeling of Cities, Yoav I H Parish, Pascal Müller: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.92.5961&rep=rep1&type=pdf

- A Survey of Procedural Techniques for City Generation, George Kelly, Hugh McCabe: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.91.8713&rep=rep1&type=pdf

- Real-time Procedural Generation of ‘Pseudo Infinite’ Cities, Stefan Greuter, Jeremy Parker, Nigel Stewart, Geoff Leach: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.88.7296&rep=rep1&type=pdf

- Procedural City Modeling, Thomas Lechner, Ben Watson, Uri Wilensky, Martin Felsen: http://ccl.northwestern.edu/papers/ProceduralCityMod.pdf

There are also various commercial, open source and experimental projects to be found on the net. Probably the best known commercial product is the heavyweight City Engine (http://www.procedural.com/cityengine/features.html), while independent projects such as http://www.synekism.com are also worth a look (a city simulation video game focused on dynamically generated content; the project was started and is currently maintained by three university students in Canada).

First Things First

Of course before a city can be generated I need to know where to put it in the world – I do have an entire planet to play with here. Eventually I would like to have a system something along the lines of:

- Select a number of random points on the planet’s landmasses that are at least a given distance from each other

- Group clumps of nearby city points into political entities – these will form the countries

- Create Voronoi cells around these point groups – add a little noise and hopefully we have political boundaries for countries

- Use A* or other pathing algorithm to form primary road routes between each city point and its neighbours

- Generate cities

However that’s all still on the drawing board so for now I’m just picking points at random that aren’t too close to each other and not too close to water. Simples.
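The interim approach of picking random, well separated points can be sketched as simple rejection sampling on a sphere. This is only an illustration under stated assumptions: the `is_land` callback is a hypothetical stand-in for whatever test the terrain system provides to reject points in or near water, and the function names, spacing and attempt limit are made up for the example.

```python
import math
import random

def random_unit_vector(rng):
    """Uniformly distributed point on the unit sphere."""
    z = rng.uniform(-1.0, 1.0)
    theta = rng.uniform(0.0, 2.0 * math.pi)
    r = math.sqrt(1.0 - z * z)
    return (r * math.cos(theta), r * math.sin(theta), z)

def great_circle_km(a, b, radius_km):
    """Surface distance between two unit vectors on a sphere of the given radius."""
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    return radius_km * math.acos(dot)

def pick_city_sites(count, min_spacing_km, is_land,
                    radius_km=6371.0, seed=1, max_attempts=100000):
    """Pick up to `count` land points that are at least `min_spacing_km` apart."""
    rng = random.Random(seed)
    sites = []
    for _ in range(max_attempts):
        if len(sites) == count:
            break
        candidate = random_unit_vector(rng)
        if not is_land(candidate):  # hypothetical land/water test
            continue
        if all(great_circle_km(candidate, s, radius_km) >= min_spacing_km
               for s in sites):
            sites.append(candidate)
    return sites

# Toy usage: pretend the whole northern hemisphere is land.
sites = pick_city_sites(20, 500.0, lambda p: p[2] > 0.0)
print(len(sites))
```

Checking every candidate against every accepted site is quadratic, which is fine for a few thousand cities but would want a spatial grid for many more.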

Laying Out The City

The approach I’m taking is to first generate a road network for the city, then to find all the bounded areas enclosed by the roads (I am calling these “charts”) before subdividing these charts into convex “lots” each of which will ultimately receive a building type chosen based upon city parameters and lot placement.

The road network is made up of two types of entity, “road segments” and “junctions”. The starting point for generation is a set of one or more junctions placed randomly within the central area of the city, each of which has between two and five connection points onto which road segments can be added. A loop then continually looks at any junctions in the road network that have connection points without roads attached and tries to add a road segment onto each of them. In addition to adding road segments onto junctions it also looks at any road segments that are only connected at one end – either to a junction or to a preceding road segment – and tries to add another road segment or junction on to that segment.

Make sense? Effectively each iteration of the loop causes the road network to grow by one step, each step either adding a road segment onto a previous one, adding a junction onto a previous road segment or adding a road segment onto a previous junction. Each road segment is given a “generation” number, the ones created from the initially placed junctions are generation zero and then every time a junction is added on to the end of a road segment which then spawns further road segments that aren’t continuations of the original road these child segments are given a higher generation number, 1, 2, 3, etc. This generation number can then be used later when deciding on the type of road geometry to create – generation #0 could be dual carriageway for example, generation #1 main roads and generation #2 narrower secondary streets.

Proximity constraints are applied at each step to prevent roads being created that would be too close to existing roads or junctions, and connection tests are made to see if an open road end is close enough to a junction with an open connection point to allow the two to be connected. The amount of variation in road heading also changes with generation and distance from the centre of the city – so low generation roads tend to be straighter while high generation (i.e. minor) roads tend to wriggle around more, creating the suburbs. The amount of variation also increases as roads are further from the city centre.

To illustrate the process, these three images are sequential representations of the generation of one city’s road network:

In these images the Green roads are generation zero, Yellow is one, Blue is two, Magenta is three and Red is four. You will see that at certain points it appears to skip a generation (a red road directly from a blue one for example) – this is a result of a road segment from the intermediate generation being created then removed due to a proximity constraint violation, but then the cause of the violation in turn being later removed due to a violation of its own, allowing a new road of a later generation to replace the original.

Once no more roads or junctions can be created due to proximity constraints or distance from city centre checks the process terminates.

Charting it up

Once the entire road network has been created the next step is to find all the enclosed areas (which I call “charts”) which will eventually provide the lots for buildings to be placed on. To do this I first use Andrew’s Monotone Chain Algorithm to find the convex hull that encloses the entire road network. Tracing around from any exterior road vertex then produces one big chart enclosing the outskirts of the city. Tracing around from all other uncharted vertices in turn then adds a number of additional charts each representing an enclosed area of the city.
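For reference, Andrew's Monotone Chain is short enough to show in full. The version below is the standard textbook form of the algorithm in Python, not code lifted from the city generator; it takes 2D points as `(x, y)` tuples and returns the hull vertices in counter-clockwise order.

```python
def cross(o, a, b):
    """Z component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Andrew's monotone chain: convex hull in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower = []
    for p in pts:
        # Pop while the last two points and p do not make a left turn.
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]

# Toy road vertices: a rectangle with a few interior and collinear points.
road_vertices = [(0, 0), (4, 0), (4, 3), (0, 3), (2, 1), (1, 2), (2, 0)]
print(convex_hull(road_vertices))
```

Using `<= 0` in the turn test drops collinear points from the hull, which is usually what is wanted when the hull is only needed as an outline.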

The charts produced from the above road network look like this:

As you can see each chart represents a connected area of the city entirely enclosed by roads or the city boundary. They are pretty much all concave though and hard to work with further due to this complexity, so the next step is to break these charts down into convex “lots” onto which individual buildings can be placed. There are several ways this can be done, and I tried a few of them to see which worked best.

First I tried combining “ears” of the charts into convex regions. Ear clipping is a common algorithm for triangulating complex polygons and involves finding sets of three adjacent vertices where the vector between the first and third vertex doesn’t cross any other edge of the polygon, effectively forming a corner or “ear” – you keep doing this until you have no polygon left which as long as there aren’t any holes is guaranteed to occur. The downside of this approach is that you end up with all sorts of random shapes many of which are narrow slivers or shards not very well suited to building lot usage.

Next I tried finding pairs of vertices in the polygon that were as diametrically opposite as possible while having a vector between them that didn’t intersect any other polygon edge. The thought was that this would continually chop the polygon roughly in half producing more even lot distribution but in practice the shapes produced were no more regular and still not suitable.

Finally the solution I settled on was to find the convex hull of each chart (again using Andrew’s Monotone Chain) and from this find the vector representing the hull’s longest axis. This was done by summing the length of each edge into buckets each representing an angle range (10 degrees in my case) then taking the angle from the bucket with the longest total edge length accumulated. The chart was then rotated by this angle to align this longest axis with the Y axis before finally clipping the chart into two pieces across the middle in the X axis to form two new charts. Repeatedly doing this until the individual charts are small enough to count as lots produces a set of output charts many but not all of which are convex – I decided to classify the convex ones as building lots and the remaining concave ones as greenfield lots for parks, car parks or other non-building purposes.
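The bucket step of this approach might look something like the following Python sketch. It is an interpretation rather than the actual implementation: it assumes edge directions are folded into the range 0 to 180 degrees so that opposite sides of a hull land in the same bucket, and it returns the centre of the winning bucket. The function name is invented for the example.

```python
import math

def dominant_edge_angle(hull, bucket_degrees=10):
    """Return the centre angle (degrees, in [0, 180)) of the bucket that
    accumulates the greatest total hull edge length."""
    bucket_count = 180 // bucket_degrees
    totals = [0.0] * bucket_count
    for i, (x0, y0) in enumerate(hull):
        x1, y1 = hull[(i + 1) % len(hull)]
        dx, dy = x1 - x0, y1 - y0
        length = math.hypot(dx, dy)
        if length == 0.0:
            continue
        # Fold direction into [0, 180) so an edge and its reverse agree.
        angle = math.degrees(math.atan2(dy, dx)) % 180.0
        index = min(int(angle // bucket_degrees), bucket_count - 1)
        totals[index] += length
    best = max(range(bucket_count), key=totals.__getitem__)
    return (best + 0.5) * bucket_degrees

# A long thin rectangle: the two long sides dominate.
rectangle = [(0, 0), (10, 0), (10, 2), (0, 2)]
print(dominant_edge_angle(rectangle))
```

Because the answer is quantised to the bucket width, the rectangle above reports 5 degrees rather than 0. Edges that straddle the 0/180 degree wrap also split their length between the first and last buckets, so a real implementation may want to handle that case.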

The result of this lot subdivision on the above city looks like this:

Here the green lots are suitable for building, the red lots are concave and thus classified for parks or similar and the blue lots are isolated from the road network and not suitable for use at all. Removing the isolated lots and tweaking the colours produces this:

Here the grey charts are suitable for building with the green ones representing parks – the red charts have been classified as being too small to be considered further – in practice these would probably end up being simple tarmac areas suitable for street furniture. Although it’s far from perfect I’m not too unhappy with the result – it could be better, I would like to see more consistently useful lot shapes for example but it may be good enough to be getting on with.

The final step is taking the road network and lots and generating renderable geometry from them; after that it’s building selection and construction, which I haven’t got to yet. This is how the road and lot network looks in the engine for now anyway:

And that’s it as it stands. The city generator is deterministic and seeded from the city’s world position (and in the future its physical/political parameters) so I’ve many cities all around my planet of varying sizes and complexity. The next step is building generation and placement. Procedural architectural generation is a whole new bag, so it’s back to Google for me…
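One way to get that kind of determinism is to hash the city's world position into a seed for a private random number generator. The sketch below is an assumption about how this could be done, not a description of the generator itself; `city_seed` and `city_rng` are names invented for the example.

```python
import hashlib
import random
import struct

def city_seed(world_position):
    """Derive a stable 64-bit seed from a city's (x, y, z) world position."""
    packed = struct.pack("<3d", *world_position)  # three little-endian doubles
    digest = hashlib.sha256(packed).digest()
    return int.from_bytes(digest[:8], "little")

def city_rng(world_position):
    """A private generator, so city layout never depends on global random state."""
    return random.Random(city_seed(world_position))

position = (1234.5, -987.25, 6371000.0)
rng_a = city_rng(position)
rng_b = city_rng(position)
print(rng_a.random() == rng_b.random())  # same position, same sequence
```

Hashing the raw bytes means two runs on different machines produce the same city, provided the position itself is computed identically.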

Generating (a lot of) Data

In my previous post I introduced the Osiris project that I’ve started working on and outlined the basic construction system for the planet I’m trying to procedurally build. With that set up, the next step was to create a system for generating and storing the multitude of data that is required to represent an entire planet.

Now planets are pretty large things and the diversity and quantity of data that is required to represent one at even fairly low fidelity gets very large very quickly. A requirement of this system though is that I want to be able to run my demo and immediately fly down near to the surface to see what’s there – I don’t want to have to sit waiting for minutes or hours while it churns away in the background building everything up.

For this to work I obviously need some form of asynchronous data generation system that can run in the background spitting out bits and pieces of data as quickly as possible while the main foreground thread is dealing with the user interface, camera movement and most importantly rendering the view.

This fits quite well with modern CPUs where the number of logical cores and hardware threads is continuing to rise providing increasing scope for such background operations, but that does also mean that the data generation system needs to be able to run on an arbitrary number of threads rather than just a single background one. An added bonus of such scalability is that time can even be stolen from the primary rendering thread when not much else is going on – for example when the view is stationary or when the application is minimised.

Ultimately this work should be able to be farmed off to secondary PCs in some form of distributed computing system or even out into the cloud – but to support that data generation has to be completely decoupled from the rendering and able to operate in isolation. Even if such distribution never happens though designing in such separation and isolation is still a valuable architectural design goal.

So I need to be able to generate data in the background, but to achieve my interactive experience I also need it to be generating the correct data in the background, which in this case means that at any given moment I want it to be generating data for the most significant features that are closest to the viewpoint. This determination of what to generate also needs to be highly dynamic as the viewpoint can move around very quickly – thousands or even tens of thousands of miles per hour at times – so it’s no good queuing up thousands of jobs, the current set of what’s required needs to be generated and maintained on the fly.
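A minimal sketch of that "work out what matters right now" step is shown below. The priority used here, a tile's size divided by its distance from the viewpoint, is an assumed stand-in for "most significant and closest"; the tile dictionaries and the `select_jobs` name are invented for the example.

```python
import heapq
import math

def select_jobs(pending_tiles, viewpoint, max_jobs):
    """Pick the tiles most worth generating for the current viewpoint.

    Each tile is a dict with a 'centre' (x, y, z) and a 'size' in world
    units. The list is rebuilt from scratch whenever it is needed rather
    than kept as a long-lived queue, so a fast-moving camera never waits
    on stale work.
    """
    def priority(tile):
        distance = math.dist(tile["centre"], viewpoint)
        return tile["size"] / max(distance, 1e-6)  # rough apparent size

    return heapq.nlargest(max_jobs, pending_tiles, key=priority)

tiles = [
    {"id": "far", "centre": (1000.0, 0.0, 0.0), "size": 10.0},
    {"id": "near", "centre": (10.0, 0.0, 0.0), "size": 10.0},
    {"id": "big", "centre": (100.0, 0.0, 0.0), "size": 500.0},
]
print([t["id"] for t in select_jobs(tiles, (0.0, 0.0, 0.0), 2)])
```

Each worker thread would then take the next job from this short list, and the list is simply thrown away and rebuilt when the viewpoint has moved far enough.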

Finally as generation of data can be a non-trivial process the system needs to be able to cache data it’s already generated on disk for rapid reloading on subsequent runs or even for later on in the same run if the in-memory data had to be flushed to keep the total memory footprint down. I can’t simply cache everything however as for an entire planet the amount of data for the level of fidelity I want to reproduce can easily run into terabytes so it’s important to only cache up to a realistic point – say a few gigabytes worth – with the rest being always generated on demand.

To maximise the effectiveness of disk caching I also want to include compression in the caching system – the computation overhead of a standard compression library such as zlib shouldn’t be exorbitantly expensive compared to the potentially gigabytes of saved disk space.
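To make the idea concrete, here is a small Python sketch of a size-limited, zlib-compressed disk cache. It is deliberately simplistic and is not the Osiris cache: the `DiskCache` class, its one-file-per-key layout and the budget check are assumptions made for the example, and keys are assumed to be safe to use as file names.

```python
import os
import tempfile
import zlib

class DiskCache:
    """Stores zlib-compressed blobs as files, refusing writes over a byte budget."""

    def __init__(self, directory, budget_bytes):
        self.directory = directory
        self.budget_bytes = budget_bytes
        os.makedirs(directory, exist_ok=True)

    def _path(self, key):
        return os.path.join(self.directory, key + ".z")

    def _used_bytes(self):
        # Rescans the directory on every call: simple, but slow for big caches.
        return sum(os.path.getsize(os.path.join(self.directory, name))
                   for name in os.listdir(self.directory))

    def store(self, key, data):
        compressed = zlib.compress(data, 6)
        if self._used_bytes() + len(compressed) > self.budget_bytes:
            return False  # over budget: caller regenerates on demand instead
        with open(self._path(key), "wb") as handle:
            handle.write(compressed)
        return True

    def load(self, key):
        try:
            with open(self._path(key), "rb") as handle:
                return zlib.decompress(handle.read())
        except FileNotFoundError:
            return None

with tempfile.TemporaryDirectory() as directory:
    cache = DiskCache(directory, budget_bytes=1024)
    heightfield = bytes(100_000)  # highly compressible dummy data
    print(cache.store("tile_0_0", heightfield))
    print(cache.load("tile_0_0") == heightfield)
```

A fuller version would evict old entries rather than just refusing new ones, and would track the running total instead of rescanning the directory.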

This is quite a shopping list of requirements of course, which brings home the unavoidable complexity of generating high fidelity data on a planetary scale, but even non-optimal solutions to these primary requirements should allow me to build on top of such a generic data generation system and start to look at the planetary infrastructure generation and simulation work that I am primarily interested in.

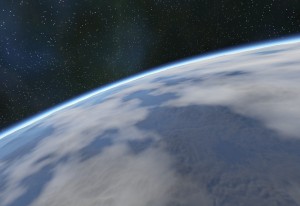

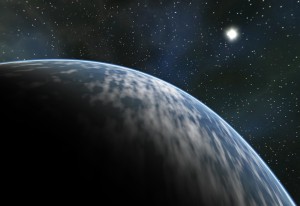

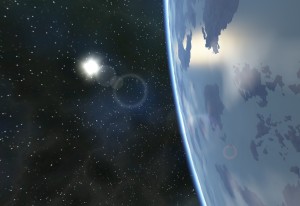

Unfortunately of course data generation architecture lends itself only so well to pretty pictures so rather than some dull boxes and lines representation of data flow the images with this post show the atmospheric scattering shader that I’ve also recently added – it’s probably the single biggest improvement in both visual impact and fidelity and suddenly makes the terrain look like a planet rather than just a textured ball – more on this atmospheric shader in a later post.

2010 in review

The stats helper monkeys at WordPress.com mulled over how this blog did in 2010, and here’s a high level summary of its overall blog health:

The Blog-Health-o-Meter™ reads: This blog is doing awesome!

Crunchy numbers

A helper monkey made this abstract painting, inspired by your stats.

The Leaning Tower of Pisa has 296 steps to reach the top. This blog was viewed about 1,200 times in 2010. If those were steps, it would have climbed the Leaning Tower of Pisa 4 times.

In 2010, there were 16 new posts, not bad for the first year! There were 87 pictures uploaded, taking up a total of 13 MB. That’s about 2 pictures per week.

The busiest day of the year was November 10th with 66 views. The most popular post that day was A note on voxel cell LOD transitions.

Where did they come from?

The top referring sites in 2010 were

gamedev.net, wiftcoder.wordpress.com and wiki.blitzgamesstudios.com

Some visitors came searching, mostly for voxel lod, gpu clipmap, world lod solutions, nvidia gpu enerated landscapes, and octave isosurface sphere.

Attractions in 2010

These are the posts and pages that got the most views in 2010.

A note on voxel cell LOD transitions July 2010

Procedural News May 2010

Introduction to Geo May 2010

Planetary Basics May 2010

Links May 2010

A Note on Co-ordinate Systems

Before delving deeper into terrain construction I thought a brief note on co-ordinate systems would be worthwhile. Stellar bodies come in all shapes and sizes but, as I live on planet Earth like most people, basing my virtual planet upon this well known baseline makes a lot of sense.

Now the Earth isn’t quite a perfect sphere so its radius varies, but its volumetric mean radius is about 6371 km so that’s a good enough figure to go on. Most computer graphics use single precision floating point numbers for their calculations as they are generally a bit faster for the computer to work with and are universally supported by recent graphics cards, but with only 23 bits for the significand they have only about seven significant decimal digits, which can be a real problem when representing data on a planetary scale.

Simply using a single precision floating point vector to represent the position of something on the surface of our Earth sized planet, for example, would give an effective resolution of around half a metre at best (working in metres), and worse once rounding errors accumulate through a chain of transforms. That is possibly good enough for a building but hardly useful for anything smaller, and definitely insufficient for moving around at speeds lower than hundreds of kilometres per hour. Try to naively use floats for the whole pipeline and we quickly see our world jiggling and snapping around in a truly horrible manner as we navigate.

Moving to the use of double precision floating point numbers is an obvious and easy solution to this problem, however, as with their 52 bit significand they can easily represent positions down to millionths of a millimetre at planetary scale, which is more than enough for our needs. With modern CPUs their use is no longer as prohibitively expensive in performance terms as it used to be either, with some rudimentary timings showing only a 10%-15% drop in speed when switching core routines from single to double precision. The large amounts of RAM available now also make the increased memory requirement of doubles easily justified. The problem of modern GPUs using single precision remains, however, as somehow we have to pipe our double precision data from the CPU through the single precision pipe to the GPU for rendering.
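The precision figures are easy to check. The short Python sketch below (Python 3.9 or later for `math.ulp`) measures the gap between adjacent representable values at one Earth radius, assuming positions are stored in metres; the helper names are invented for the example, and single precision is emulated by round-tripping through `struct`.

```python
import math
import struct

def to_float32(value):
    """Round a Python float (a double) to the nearest single precision value."""
    return struct.unpack("<f", struct.pack("<f", value))[0]

def float32_spacing(value):
    """Gap between adjacent float32 values near |value| (normal range only)."""
    _, exponent = math.frexp(abs(to_float32(value)))
    return math.ldexp(1.0, exponent - 24)  # float32 has a 24 bit significand

EARTH_RADIUS_M = 6_371_000.0

print(float32_spacing(EARTH_RADIUS_M))  # single precision step, in metres
print(math.ulp(EARTH_RADIUS_M))         # double precision step, in metres
print(to_float32(6_371_000.2))          # nearby positions snap to the float32 grid
```

At this magnitude the single precision step is half a metre per axis, while the double precision step is about a nanometre, which matches the "millionths of a millimetre" figure.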

My solution for this is simply to have the single precision part of the process, namely the rendering, take place in a co-ordinate space centred upon the viewpoint rather than the centre of the planet. This ensures that the available resolution is being used as effectively as possible: when the precision falls off on distant objects, these are by nature very small on screen, where the numerical resolution issues won’t be visible.

To make this relocation of origin possible, I store with each tile its centre point in world space as a double precision vector then store the vertex positions for the tile’s geometry components as single precision floats relative to this centre. Before each tile is rendered, the vector from the viewpoint to the centre of the tile in double precision space is calculated and used to generate the single precision complete tile->world->view->projection space matrix used for rendering.

In this way the single precision vertices are only ever transformed to be in the correct location relative to the viewpoint (essentially the origin for rendering) to ensure maximum precision. The end result is that I can fly from millions of miles out in space down to being inches from the surface of the terrain without any numerical precision problems.
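The key step is that the subtraction happens in double precision and only the small result is narrowed to single precision. The Python sketch below shows just that translation part (the real pipeline folds it into a full matrix); the positions are made up and `to_float32` emulates single precision by round-tripping through `struct`.

```python
import struct

def to_float32(value):
    """Round a Python float (a double) to the nearest single precision value."""
    return struct.unpack("<f", struct.pack("<f", value))[0]

def tile_offset_for_rendering(tile_centre, viewpoint):
    """Subtract in double precision, then narrow the small result to float32."""
    return tuple(to_float32(c - v) for c, v in zip(tile_centre, viewpoint))

# Made-up positions in metres, roughly one Earth radius from the origin.
tile_centre = (6_371_000.0, 1234.5678, 0.0)
viewpoint = (6_371_001.7, 1234.0, 0.0)

# Camera-relative: the offset of about -1.7 m survives almost exactly.
good = tile_offset_for_rendering(tile_centre, viewpoint)

# Naive: narrowing each world position first snaps it to a 0.5 m grid.
naive = tuple(to_float32(c) - to_float32(v)
              for c, v in zip(tile_centre, viewpoint))

print(good[0], naive[0])
```

In the naive case the viewpoint is rounded to 6,371,001.5 before the subtraction, so the tile appears 20 cm away from where it should be, and that error changes abruptly as the camera moves.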

There are of course other ways to achieve this, using nested co-ordinate spaces for example, but selective use of doubles on the CPU is both simple and relatively efficient in performance and memory costs so feels like the best way.

Of course numerical precision issues are not limited to the positions of vertices; another example is easily found with the texture co-ordinates used to look up into the terrain textures. On the one hand I want a nice continuous texture space across the planet to avoid seams in the texture tiling but as the texture co-ordinates are only single precision floats there simply isn’t the precision for this. Instead different texture scales have to be used at different terrain LOD levels and the results somehow blended together to avoid seams – I’m not going to go too deeply into my solution for that here as I think it’s probably worth a post of its own in due course.

Osiris, the Introduction

Continuing the theme of procedurally generated planets, I’ve started a new project I’ve called Osiris (the Egyptian god usually identified as the god of the Afterlife, the underworld and the dead) which is a new experiment into seeing how far I can get having code create a living breathing world.

Although my previous projects Isis and Geo were both also in this vein, I felt that they each had such significant limitations in their underlying technology that it was better to start again with something fresh. The biggest difference between Geo and Osiris is that where the former used a completely arbitrary voxel based mesh structure for its terrain, Osiris uses a more conventional, essentially 2D, tile based structure. I decided to do this as I was never able to achieve a satisfactory transition effect between the levels of detail in the voxel mesh, leaving ugly artifacts and, worse, visible cracks between mesh sections – both of which made the terrain look essentially broken.

After spending so much time on the underlying terrain mesh systems in Isis and Geo I also wanted to implement something a little more straightforward so I could turn my attention more quickly to the procedural creation of a planetary scale infrastructure – cities, roads, railways and the like along with more interesting landscape features such as rivers or icebergs. This is an area that really interests me and is an immediately more appealing area for experimentation as it’s not an area I have attempted previously. Although a 2D tile mesh grid system is pretty basic in the terrain representation league table, there is still a degree of complexity to representing an entire planet using any technique so even that familiar ground should remain interesting.

The first version shown here is the basic planetary sphere rendered using mesh tiles of various LOD levels. I’ve chosen to represent the planet essentially as a distorted cube with each face represented by a single 32×32 tile at the lowest LOD level. While the image below on the left may be suitable as a base for Borg-world, I think the one on the right is the basis I want to pursue…

While mapping a cube onto a sphere produces noticeable distortion as you approach the corners of each face, by generating terrain texturing and height co-ordinates from the sphere’s surface rather than the cube’s I hope to minimise how visible this distortion is and it feels like having what is essentially a regular 2D grid to build upon will make many of the interesting challenges to come more manageable. The generation and storage of data in particular becomes simpler when the surface of the planet can be broken up into square patches each of which provides a natural container for the data required to simulate and render that area.
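The simplest cube to sphere mapping is just to normalise each cube surface point and scale it by the planet radius. The Python sketch below uses that mapping as an assumption (the post does not say which mapping is used, and there are alternatives that spread the distortion more evenly); the function names are invented for the example.

```python
import math

def cube_to_sphere(point, radius):
    """Project a point on the surface of the cube [-1, 1]^3 onto a sphere."""
    x, y, z = point
    length = math.sqrt(x * x + y * y + z * z)
    return (radius * x / length, radius * y / length, radius * z / length)

def face_vertices(cells=32, radius=6371.0):
    """Vertices for the +Z cube face as a (cells + 1) x (cells + 1) grid."""
    vertices = []
    for row in range(cells + 1):
        v = -1.0 + 2.0 * row / cells
        for col in range(cells + 1):
            u = -1.0 + 2.0 * col / cells
            vertices.append(cube_to_sphere((u, v, 1.0), radius))
    return vertices

vertices = face_vertices()
print(len(vertices))            # 33 x 33 vertices for a 32 x 32 polygon patch
print(vertices[16 * 33 + 16])   # centre of the face lands on the +Z pole
```

With this mapping the polygons near the centre of a face come out larger than those near its corners, which is the distortion mentioned above.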

At this lowest level of detail (LOD) each face of the planetary cube is represented by a single 32×32 polygon patch. At this resolution each patch covers about 10,000 km of an Earth sized planet’s equator with each polygon within it covering about 313 km. While that’s acceptable when viewing the planet from a reasonable distance in space as you get closer the polygon edges start to get pretty damn obvious so of course the patches have to be subdivided into higher detail representations.

I’ve chosen to do this in pretty much the simplest way possible to keep the code simple and make a nice robust association between sections of the planet’s surface and the data required to render them. As the view nears a patch it gets recursively divided into four smaller patches, each of which is 32×32 polygons in its own right, effectively halving the size of each polygon in world space and quadrupling the polygonal density.
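The arithmetic behind those figures, and how quickly the subdivision converges, can be worked through in a few lines of Python. The equatorial circumference is an approximate real-world value; the function names are invented for the example and the sizes are averages that ignore the cube to sphere distortion.

```python
import math

EQUATOR_KM = 40_075.0          # Earth's equatorial circumference, approximately
FACES_AROUND_EQUATOR = 4       # four cube faces wrap the equator
POLYGONS_PER_PATCH_EDGE = 32

def patch_size_km(level):
    """Average edge length of one patch after `level` subdivisions."""
    return EQUATOR_KM / FACES_AROUND_EQUATOR / (2 ** level)

def polygon_size_km(level):
    """Average edge length of one polygon within a patch at this level."""
    return patch_size_km(level) / POLYGONS_PER_PATCH_EDGE

def patches_per_face(level):
    """Each subdivision splits every patch into four children."""
    return 4 ** level

def levels_for_polygon_size(target_m):
    """Subdivisions needed before polygons are no larger than `target_m` metres."""
    return math.ceil(math.log2(polygon_size_km(0) * 1000.0 / target_m))

print(round(patch_size_km(0)), round(polygon_size_km(0)))  # about 10019 and 313
print(levels_for_polygon_size(1.0))                        # levels to reach 1 m polygons
```

Because each level halves the polygon size, fewer than twenty levels take the mesh from hundreds of kilometres per polygon down to about a metre.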

Here you can see four stages of the subdivision illustrated – normally of course this would be happening as the view descended towards the planet but I’ve kept the view artificially high here to illustrate the change in the geometry. With such a basic system there is obviously a noticeably visible ‘pop’ when a tile is split into its four children – this could be improved by geo-morphing the vertices on the child tile from their equivalent positions on the parent tile to their actual child ones but as the texturing information is stored on the vertices there is going to be a pop as the higher frequency texturing information appears anyway. Another option might be to render both tiles during a transition and alpha-blend between them, a system I used in the Geo project with mixed results.

LOD transitions are a classic problem in landscape systems but I don’t really want to get bogged down in that at the moment so I’m prepared to live with this popping and look at other areas. It’s a good solid start anyway though I think and with some basic camera controls set up to let me fly down to and around my planet I reckon I’m pretty well set up for future developments.

A note on voxel cell LOD transitions

I recently received a question from a developer who has kindly been reading my blog asking about how I tackled the joins between voxel cells of differing resolutions. This was one of the most challenging problems raised by the voxel terrain system and so rather than put my response in a mail I thought it might be more useful to make it public for anyone else who visits.

I’ll start by saying however that after devoting time to several different strategies I never did come to a solution that I was entirely happy with – each had its problems, some more objectionable than others, but I’ll relate each experiment here. Maybe someone else can take something from them and improve the results.

Before getting to my solutions, it’s probably worth first describing the problem: essentially the whole environment is made up of cube shaped cells of voxel data, each one containing geometry representing the iso-surface within that cube. The number of sample points and therefore the iso-surface resolution within each cell is constant but to keep the amount of data in memory and being rendered reasonable, cells close to the camera represent small areas of the world while cells further away represent proportionally larger areas. In a manner similar to mip-mapping for textures, each level of cells is twice as large in the world as the ones from the preceding level. Near the camera for example a cell might cover 10 metres of world space while moving into the middle distance would switch to 20 metre cells, then 40 metre, then 80 and so on.

While a continuous representation would improve the consistency of triangle size over the view distance, the management of a cell-based approach is far simpler as individual cells can be generated, cached and retrieved very simply. The downside however is exactly the problem asked about – where a 10m cell abuts a 20m cell for example, every other sample point on the 10m cell will have no corresponding sample point in the 20m cell and we end up with a floating vertex that in most cases does not line up with the edge of the triangle in the 20m cell it is next to, so we get a visual crack in the world geometry.
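To make the mismatch concrete, here is a minimal sketch that lists the sample positions along a shared edge for a 10m and a 20m cell and picks out the ones with no counterpart. The per-edge sample count of 5 is a made-up figure for illustration, not the value used in the project.

```python
def edge_samples(origin, cell_size, samples_per_edge):
    """Sample positions along one edge of a cell, end points included."""
    step = cell_size / (samples_per_edge - 1)
    return [origin + i * step for i in range(samples_per_edge)]


def hanging_samples(fine, coarse):
    """Fine-cell samples that have no matching sample in the coarse cell."""
    coarse_set = set(coarse)
    return [p for p in fine if p not in coarse_set]


SAMPLES = 5  # hypothetical per-edge sample count, identical at every level
fine = edge_samples(0.0, 10.0, SAMPLES)    # 0, 2.5, 5, 7.5, 10
coarse = edge_samples(0.0, 20.0, SAMPLES)  # 0, 5, 10, 15, 20
print(hanging_samples(fine, coarse))       # [2.5, 7.5]
```

Every other fine sample is left hanging, and those are the vertices that can open up a crack.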

The first method I tried was to simply displace the floating vertex along its normal by some distance based upon how far it was from the viewpoint. This correlates to other simple techniques for hiding LOD cracks in 2D height fields where verts can be moved ‘up’ by some amount to hide the crack – it works to a degree in 3D too as I found and could often hide the resultant crack but unfortunately it wasn’t reliable as sometimes the normal didn’t happen to point in the best direction to hide the crack, and sometimes the nature of the voxel data itself meant that no sensible amount of movement could hide the resultant crack regardless of direction due to rapidly changing curvatures or other high frequency detail.
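As a rough sketch of that first experiment, the displacement might look something like this. The linear relationship to view distance and the `scale` factor are assumptions made for illustration; the post only says the distance was "based upon" how far the vertex was from the viewpoint.

```python
def displace_along_normal(position, normal, view_distance, scale=0.01):
    """Push a hanging vertex out along its unit normal.

    'scale' is a hypothetical tuning factor; the offset grows linearly
    with distance from the viewpoint in this sketch.
    """
    offset = view_distance * scale
    return tuple(p + n * offset for p, n in zip(position, normal))
```

The weakness described above is visible in the signature: the only direction available is the vertex normal, which has no particular reason to point across the crack.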

The second experiment was to try to create ‘fillet’ geometry that would connect the hanging vertices from the higher detail cells to the vertices in the lower detail cells. I have used this system in the past on 2D height fields to eliminate cracks and while it’s a bit fiddly it does guarantee crack free results. Unfortunately I had underestimated just how complex it is to extend this system from 2D to 3D – the sheer number of cases that need to be handled to enable low detail sub-cube sides to map to higher detail sides in all possible configurations is the biggest problem, especially where a cell has a mixture of higher and lower detail neighbours.

I achieved limited success with this but didn’t manage to get it working satisfactorily, eventually putting it to one side as I tried to find a less fiddly solution.

The final solution that I ended up with was to render cells that had neighbours of differing resolutions twice, calculating an alpha value in the pixel shader based upon the proximity of the point to the edge of the cell where the resolution changed. By enabling alpha-to-coverage rendering this produced a screen space dissolve effect that, while inferior to correctly connecting geometry, was actually fairly visually unobtrusive in most cases – the higher frequency terrain detail fades in as you approach which hides the transition between LODs fairly well, especially when combined with a degree of atmospheric fog.
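A minimal sketch of the kind of alpha calculation described, written in Python rather than shader code. The linear ramp, its direction and the `fade_width` parameter are all assumptions; the real shader may well shape the fade differently.

```python
def crossfade_alpha(distance_to_edge, fade_width):
    """Blend weight for the higher-detail copy of a cell.

    Returns 0 on the boundary where the resolution changes, rising
    linearly to 1 once the point is 'fade_width' (hypothetical) away.
    """
    if fade_width <= 0.0:
        return 1.0
    return max(0.0, min(1.0, distance_to_edge / fade_width))
```

With alpha-to-coverage enabled the hardware turns this alpha into a per-sample coverage mask, which is what gives the dissolve look instead of a true blend.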

It’s not perfect though and looks a little peculiar where there is high frequency detail producing large differences between LODs or when the viewpoint is moving slowly and part cross-faded features remain on screen for significant periods of time. There is also of course a performance cost of rendering some cells twice but it’s the least evil solution I came up with so I left it in.

This is one of those problems where it feels like there should be a neat solution out there somewhere but so far my internet research has not turned one up – off-line rendering solutions such as subdividing down so cells are less than one pixel across are not exactly practical! If you do come across any improved solutions however or develop any of your own, do please post a comment or drop me a line – I would be very interested to hear about them.

Captain Coriolis

Continuing the theme of clouds, I’ve been looking at a few improvements to make the base cloud effect more interesting, so with the texture mapping sorted what else can we do with the clouds? Well because the texture is just a single channel intensity value at the moment we can monkey around with the intensity in the shader to modify the end result.

The first thing we can do is change the scale and threshold applied to the intensity in the pixel shader. The texture is storing the full [0, 1] range but we can choose which part of this to show to provide more or less cloud cover:

Here the left most image is showing 50% of the cloud data, the middle one just 10% and the right hand one 90%. Note that on this final image the contrast scalar has also been modified to produce a more ‘overcast’ type result. Because these values can be changed on the fly it leaves the door open to having different cloud conditions on different planets or even animating the cloud effect over time as the weather changes.
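The scale and threshold step can be sketched as a simple remap of the stored intensity. The exact formula in the shader isn't given in this post, so treat this as one plausible version with hypothetical parameter names.

```python
def cloud_coverage(intensity, threshold, contrast):
    """Remap a stored [0, 1] intensity into a visible cloud amount.

    Values below 'threshold' become clear sky; the remainder is scaled
    by 'contrast' and clamped back into [0, 1].
    """
    return max(0.0, min(1.0, (intensity - threshold) * contrast))
```

Lowering `threshold` shows more of the cloud data, and raising `contrast` pushes the result towards solid cover, which is roughly the 'overcast' look mentioned above.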

Next I thought it would be interesting to add perturbations to the cloud function itself to break up some of its unrealistic uniformity. First up is a simple simulation of the global Coriolis effect, which essentially means that the clouds in the atmosphere are subject to varying rotational forces as they move closer to or further away from the equator due to the varying tangential speeds at differing latitudes.

The real effect is of course highly complex but with a simple bit of rotation around the Y axis based upon the radius of the planet at the point of evaluation I can give the clouds a bit of a twist to at least create the right impression:

Here the image on the left is without the Coriolis effect and the image on the right is with the Coriolis effect. The amount of rotation can be played with based on the planet to make it more or less dramatic but even at low levels the distortion in the cloud layer that it creates really helps counteract the grid style regularity you usually get with noise based 3D effects.
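Here is a minimal sketch of the twist, assuming that "the radius of the planet at the point of evaluation" means the point's distance from the Y axis, and that the rotation angle is simply proportional to it. Both are my reading of the description; `strength` is a hypothetical parameter.

```python
import math


def coriolis_twist(point, strength):
    """Rotate 'point' about the Y axis before the noise is evaluated.

    The angle is proportional to the point's distance from the Y axis,
    so points at different latitudes are twisted by different amounts.
    """
    x, y, z = point
    axis_radius = math.hypot(x, z)  # radius of the latitude circle through the point
    angle = strength * axis_radius
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)
```

The twisted point is then fed into the cloud function in place of the original, so the noise itself is untouched and only the lookup position changes.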

With the mechanism in place to support the global perturbation from the Coriolis effect, I then thought it would be interesting to have a go at creating some more localised distortions to try to break up the regularity further and hopefully make the cloud layer a bit more realistic looking or at least more interesting.

The kind of distortions I was after were the swirls, eddies and flows caused by cyclical weather fronts, mainly typhoons and hurricanes of greater or lesser strength. To try to achieve this I created a number of axes in random directions from the centre of the planet, each of which had a random area of influence defining how close a point had to be to it to be affected by its perturbation and a random strength defining how strongly affected points would be influenced.

Each point being evaluated is then tested against this set of axes (40 currently) and for each one if it’s close enough it is rotated around the axis by an amount relative to its distance from the axis. So points at the edge of the axis’ area of influence hardly move while points very close to the axis are rotated around it more. This I reckoned would create some interesting swirls and eddies which in fact it does:

It can produce some fairly solid looking clumps which is not great but on average I think it adds positively to the effect. (Looking at the type of distortion produced I suspect it may also be useful for creating neat swirly gas planets or stars with an appropriate colour ramp – something for the future there I hope).
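The swirl pass described above can be sketched like this. The angular measure of "closeness", the linear falloff and the tuple layout for each axis are assumptions for illustration; only the overall idea of rotating more near the axis and less at the edge comes from the description.

```python
import math


def rotate_about_axis(p, axis, angle):
    """Rodrigues' rotation of point p about a unit-length axis through the origin."""
    c, s = math.cos(angle), math.sin(angle)
    dot = sum(a * b for a, b in zip(axis, p))
    cross = (axis[1] * p[2] - axis[2] * p[1],
             axis[2] * p[0] - axis[0] * p[2],
             axis[0] * p[1] - axis[1] * p[0])
    return tuple(p[i] * c + cross[i] * s + axis[i] * dot * (1.0 - c)
                 for i in range(3))


def swirl(p, axes):
    """Apply each (axis, influence_angle, strength) swirl to a unit-sphere point p."""
    for axis, influence, strength in axes:
        cos_sep = max(-1.0, min(1.0, sum(a * b for a, b in zip(axis, p))))
        separation = math.acos(cos_sep)  # angular distance from the axis
        if separation < influence:
            falloff = 1.0 - separation / influence  # 1 at the axis, 0 at the edge
            p = rotate_about_axis(p, axis, strength * falloff)
    return p
```

In use, `axes` would hold the randomly generated set (40 in my case) and every point is passed through `swirl` before the cloud function is evaluated.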

So far I’ve got swirly white clouds but to make them seem a bit more varied the next thing I tried was to modulate not just the alpha of the cloud at each point but the shade as well. At first I was going to go with a second greyscale fBm channel in the cube map using different lacunarity and scales to produce a suitable result, but then I thought why not use the same channel but just take a second sample from a different point on the texture. This worked out to be a pretty decent substitute: by taking a sample from the opposite side of the cube map from the one being rendered and using this as a shade value rather than an alpha it introduces some nice billowy perturbations in the clouds that I think help to give them an illusion of depth and generally look better:

The main benefit of re-using the single alpha channel is it leaves me all three remaining channels in my 32bit texture for storing the normal of the clouds so I can do some lighting. Now for your usual normal mapping approach you only need to store two components of the surface normal in the normal map texture as you can work out the third in the pixel shader, but doing this means you lose the sign of the re-constituted component. This isn’t a problem for usual normal mapping where the map is flat and you use the polygon normal, bi-normal and tangent to orient it appropriately, but in my case the single map is applied to a sphere so the sign of the third component is important. Fortunately having three channels free means I can simply store the normal raw and not worry about re-constituting any components – as it’s a sphere I also don’t need to worry about transforming the normal from image space to object space.
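The sign problem with two-component normal maps is easy to see in a small sketch. This is the standard reconstruction for a unit-length normal, not code from the project.

```python
import math


def reconstruct_z(x, y):
    """Rebuild the third component of a unit normal from the two stored ones.

    The square root is never negative, so whatever sign the original
    component had is lost.
    """
    return math.sqrt(max(0.0, 1.0 - x * x - y * y))
```

The normals (0.6, 0.0, 0.8) and (0.6, 0.0, -0.8) both store the same two components and both come back with a positive third one, which is fine on a flat tangent-space map but wrong for half of a sphere.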

Calculating the normal to store is an interesting problem also. I first tried using standard techniques to generate it from the alphas already stored in the cube map but this understandably produced seams along the edges of each cube face. Turns out a better way is to treat the fBm function as the 3D function it is, and use the gradient of the function at each point to calculate the normal – this is essentially the same way as the normals are calculated for the landscape geometry during the marching cubes algorithm.
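A minimal sketch of the gradient approach using central differences. The `field` callable stands in for the fBm function and `eps` is a hypothetical step size; whether the stored normal points along or against the gradient is a convention I've not tried to pin down here.

```python
import math


def gradient_normal(field, p, eps=1e-3):
    """Unit vector along the central-difference gradient of a scalar 3D 'field' at p."""
    x, y, z = p
    g = (field(x + eps, y, z) - field(x - eps, y, z),
         field(x, y + eps, z) - field(x, y - eps, z),
         field(x, y, z + eps) - field(x, y, z - eps))
    length = math.sqrt(sum(c * c for c in g))
    if length == 0.0:
        return (0.0, 0.0, 0.0)  # flat region, no meaningful direction
    return tuple(c / length for c in g)
```

Because the samples are taken from the 3D function and not from neighbouring texels, the cube face a point happens to live on plays no part, so no seams appear at the face edges.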

With the normal calculated and stored, I then put some fairly basic lighting calculations into the pixel shader to do cosine based lighting. The first version produced very harsh results as there isn’t really any ambient light to fill in the dark side of the clouds so I changed it to use a fair degree of double sided lighting to smooth out the effect and make it a bit more subtle/believable.
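For illustration, cosine lighting with a partial double-sided fill could look something like the sketch below. The way the back-facing contribution is mixed in and the `back_light` factor are assumptions, not the actual shader.

```python
def cloud_lighting(normal, light_dir, back_light=0.5):
    """Cosine lighting with a partial double-sided fill.

    'normal' and 'light_dir' are unit vectors; 'back_light' (hypothetical)
    is the fraction of light allowed to reach faces pointing away.
    """
    d = sum(n * l for n, l in zip(normal, light_dir))
    return max(d, 0.0) + max(-d, 0.0) * back_light
```

With `back_light` at 0 this is plain one-sided cosine lighting, which is the harsh first version; raising it fills in the dark side.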

Shown below is the planet without lighting, the generated normal map, the lighting component on its own and finally the lighting component combined with the planet rendering:

As you can see from the two right hand images there are still some problems with the system as it stands – the finite resolution of the cube map means there are obvious texel artifacts in the lighting where the normals are interpolated for a start – but I think it’s still a worthwhile addition.

Although I’m not 100% happy with the final base cloud layer effect I think that’s probably enough for now, I’m currently deciding what to do next – possibly try positioning my planet and sun at realistic astronomical distances and sizes to check that the master co-ordinate system is working. This is not visually fun but necessary for the overall project although it will probably also require some work on the camera control system to make moving between and around bodies at such distances workable.

More interestingly I might try adding some glare and lens flare to the sun rendering to make it a bit more visually pleasing – the existing disc with fade-off is pretty dull really.

Clouding the Issue

Taking a break from hills and valleys, I thought I would have a go at adding some clouds to the otherwise featureless sky around my planets. There are various methods employed by people to simulate, model and render clouds depending on their requirements, some are real-time and some are currently too slow and thus used in off-line rendering – take a look at the Links section for a couple of references to solutions I have found out on the net.

Although I would like to add support for volumetric clouds at some point (probably using lit cloud particle sprites of some sort) to get things underway I thought a single background layer of cloud would be a good starting point. Keeping to my ethos, I do of course want to generate this entirely procedurally if at all possible and as with so many other procedural effects a bit of noise is a good starting point.

In this case while I get the mapping and rendering of the cloud layer working I’ve opted to use a simple fBm function (Fractional Brownian Motion) that combines various octaves of noise at differing frequencies and amplitudes to produce quite a nice cloudy type effect. It’s not convincing on its own and will need some more work later but for now it will do.

Now the fBm function can be evaluated in 3D which is great but of course to render it on the geodesic sphere that makes up my atmosphere geometry I need to map it somehow into 2D texture space – I could use a full 3D texture here which would make it simple but would also consume a vast amount of texture memory most of which would be wasted on interior/exterior points so I’ve decided not to do that. I could also evaluate the fBm function directly in the shader but as I want to add more complex features to it I want to keep it offline.

The simplest way to map the surface of a sphere into 2D is to use polar co-ordinates where ‘u’ is essentially the angle ‘around’ the sphere and ‘v’ the elevation up or down. This is simple to calculate but produces a very uneven mapping of texture space as the texels are squeezed more and more towards the poles producing massive warping of the texture data.
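A minimal sketch of that polar mapping, assuming Y is the polar axis and that both co-ordinates are normalised to [0, 1]. The axis choice and where u starts are conventions picked for the example.

```python
import math


def polar_uv(direction):
    """Map a unit direction to (u, v) in [0, 1].

    u comes from the angle around the Y axis, v from the elevation
    between the south pole (0) and the north pole (1).
    """
    x, y, z = direction
    u = (math.atan2(z, x) + math.pi) / (2.0 * math.pi)
    v = (math.asin(max(-1.0, min(1.0, y))) + math.pi / 2.0) / math.pi
    return u, v
```

The squeezing falls straight out of this: every row of the texture has the same number of texels, but the circle of latitude it wraps around shrinks with the cosine of the elevation, down to a single point at each pole.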

The two images here show my basic fBm cloud texture and that same texture mapped onto the atmosphere using simple polar co-ordinates.

(ignore the errant line of pixels across the planet – this is caused by an error of some sort in the shader that I couldn’t see on inspection and I didn’t want to spend time on it if the technique was going to be replaced anyway)

The distortion caused by the polar mapping is very obvious when applied to the cloud texture with significant streaking and squashing evident emanating from the pole – obviously far from ideal.

One way to improve the situation is to apply the inverse distortion to the texture when you are generating it, in this case treating texture generation as a 3D problem rather than a 2D one by mapping each texel in the cloud texture onto where it would be on the sphere after polar mapping and evaluating the fBm function from that point. As can be seen in the images below this produces a cloud texture that gets progressively more warped towards the regions that will ultimately be mapped to the poles so it looks wrong when viewed in 2D as a bitmap, but should produce better results when mapped onto the sphere:

As you can see in the planetary image, the streaking and warping is much reduced using this technique and the cloud effect is almost usable but if you look closely you will see that there is still an artifact around the pole albeit a much smaller one – it would be significantly larger when viewed from the planet’s surface however so is still not acceptable. One way to ‘cheat’ around this problem is to simply ensure that cloud cover at the pole is always 100% cloud or 100% clear sky to hide the artifact and some released space based games that don’t require you to get very close to the planets do this very effectively, but in a world of infinite planets it’s a big restriction that I don’t want to have to live with.
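The inverse step used when generating the texture can be sketched as below: each texel is turned back into the point on the unit sphere it will end up on, and the 3D noise function is evaluated there. The Y-up convention and the texel-centre offset are assumptions made for the example.

```python
import math


def texel_to_sphere(col, row, width, height):
    """Unit-sphere point that texel (col, row) lands on under polar mapping."""
    u = (col + 0.5) / width   # sample at the texel centre
    v = (row + 0.5) / height
    azimuth = u * 2.0 * math.pi - math.pi
    elevation = v * math.pi - math.pi / 2.0
    ring = math.cos(elevation)  # radius of the latitude circle
    return (ring * math.cos(azimuth), math.sin(elevation), ring * math.sin(azimuth))
```

Rows near the top and bottom of the texture all map to points bunched tightly around a pole, which is why the generated bitmap looks stretched there and why so many of those texels contribute very little.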

Another downside of polar mapping is that the non-uniform nature of the texel distribution means that many of the texels on the cloud texture aren’t really contributing anything to the image depending on how close to the pole they are which is a waste of valuable texture memory.

So if polar mapping is out what are we left with? Well next I thought it was time to drop it altogether and move on to cubic mapping, a technique usually employed for reflection/environment mapping or directional ambient illumination. With this technique we generate not one cloud texture but six each representing one side of a virtual cube centred around our planet. With this setup when shading a pixel the normal of the atmospheric sphere is intersected with the cube and the texel from that point used. The benefit of cube mapping is that there is no discontinuity around the poles so it should be possible to get a pretty even mapping of texels all around the planet, making better use of texture storage and providing a more uniform texel density on screen amongst other things.

So ubiquitous is cube mapping that graphics hardware even provides a texture sampler type especially for this so we don’t even need to do any fancy calculations in the pixel shader, we simply sample the texture using the normal directly which is great for efficiency. The only downside is that now we are storing six textures instead of one the memory use does go up, so I’ve dropped the texture size from 1024×1024 to 512×512 but as each texture is only mapped onto 1/6th of the surface of the sphere the overall texel density actually increases and the more effective use of texels means the 50% increase in memory usage is worthwhile.
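The arithmetic behind those figures is quick to check. This just counts texels, so it assumes the same bytes per texel in both layouts.

```python
def texel_count(size, faces=1):
    """Total texels in 'faces' square textures of the given edge length."""
    return faces * size * size


single = texel_count(1024)        # one 1024x1024 polar texture
cube = texel_count(512, faces=6)  # six 512x512 cube faces

print(cube / single)              # 1.5, i.e. 50% more texels overall
print(single / 6, cube / 6)       # average texels covering each sixth of the sphere
```

On average a sixth of the sphere gets about 174,763 texels from the polar texture against 262,144 from a cube face, and the cube faces spread theirs far more evenly.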

The two images below show how one face of the texture cube and the planet now looks with this texture cube applied:

Again it’s different but the edges of the cube are pretty clear and ruin the whole effect, so as a final adjustment we again need to apply the inverse of the distortion effect implied by moving from a cube to a sphere and map the co-ordinates of the points on our texture cube onto the sphere prior to evaluating fBm:

Finally we have a nice smooth and continuous mapping of the cloud texture over our sphere. Result! To show how effective even a simple cloud effect like this can be in adding interest to a scene here is a view from the planet surface with and without the clouds:

There is obviously more to do but I reckon it’s a decent start.
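For reference, the cube-to-sphere adjustment described above boils down to normalising each texel's position on the cube before evaluating fBm. In this sketch the face names and their orientations are illustrative only and do not follow any particular graphics API's cube map layout.

```python
import math


def cube_texel_direction(face, col, row, size):
    """Unit direction through the centre of texel (col, row) on one cube face."""
    a = 2.0 * (col + 0.5) / size - 1.0  # [-1, 1] across the face
    b = 2.0 * (row + 0.5) / size - 1.0  # [-1, 1] up the face
    on_cube = {
        "+x": (1.0, b, -a), "-x": (-1.0, b, a),
        "+y": (a, 1.0, -b), "-y": (a, -1.0, b),
        "+z": (a, b, 1.0), "-z": (-a, b, -1.0),
    }
    x, y, z = on_cube[face]
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)  # project onto the unit sphere
```

Evaluating the 3D noise at the returned direction (scaled as required) means texels on either side of a cube edge sample neighbouring points on the sphere, so the face boundaries no longer show.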